In an effort to bolster transparency, Meta, the parent company of Facebook, Instagram, and Threads, is implementing new measures on its platforms. The company recently announced plans to label content created using artificial intelligence (AI), with the goal of giving users clearer context about what they see and share on social media.

The company will begin this initiative by labeling images, stating that “In the near future we will start adding labels to images created by AI tools across Facebook, Instagram, and Threads.” Meta is currently working on an automated detection system that can identify markers commonly embedded in AI-generated content, including watermarks and metadata. The initial focus will be on images, but the long-term goal is to identify AI-generated video and audio as well.

Meta already applies the “Imagined with AI” label on images created with Meta AI, and by working with other industry partners, the company hopes to have the new detection capabilities ready in the coming months for content created outside of Meta’s own tools. So far, the company has determined that it is capable of labeling images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock.

Video and audio, however, present a hurdle. Because the tools that generate this type of content have not yet widely adopted standardized markers, it is difficult for Meta to detect it.

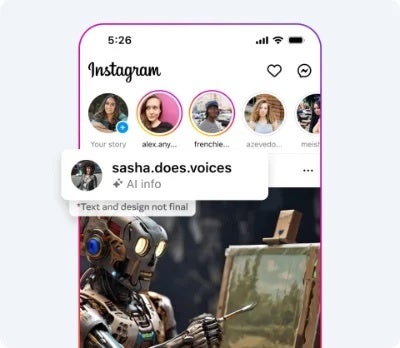

To address this issue, Meta has announced its intention to launch a new “disclosure and label tool,” which will let users manually indicate when video and audio content has been generated using AI. Users will be required to make this disclosure for digitally created or altered “photorealistic videos or realistic-sounding audio,” and Meta may apply penalties to those who do not comply.

Meta is experimenting with different ways the new “disclosure and label tool” will appear on a post.

The push for labeling stems from growing concern about the potential misuse of AI-generated content. Deepfakes, for example, can be incredibly realistic, which makes them an effective vehicle for spreading misinformation and manipulating content, especially around events like the upcoming U.S. elections.

The approach is not a perfect solution, particularly because it relies on users to label video and audio, but Meta hopes that the detection system will be effective and not easily bypassed. It remains to be seen whether Meta’s approach will achieve its goals, but the initiative certainly prompts an important discussion about the responsible use of AI across social media.